Artificial intelligence data centers are deploying liquid cooling solutions to improve thermal management as high-performance computing (HPC) workloads increase. One of the most common options is direct-to-chip cooling, which leverages liquid's high thermal transfer properties to remove heat from individual processor chips.

Air-assisted liquid cooling offers a strategic advantage for businesses aiming to harness artificial intelligence (AI) and maintain a competitive edge. Combining efficient room and direct liquid cooling methods can help organizations lower energy costs, boost performance, and meet AI data center demands.

Overview of data center liquid cooling

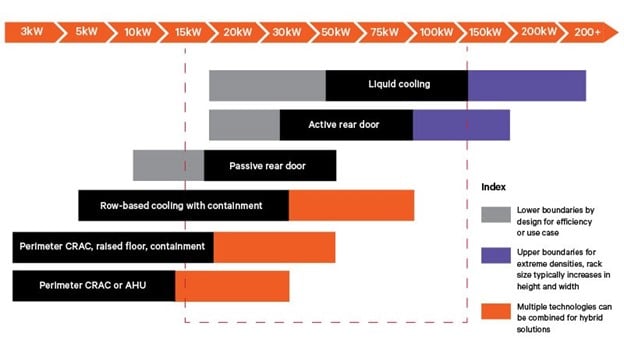

The widespread adoption of HPC services, such as AI, machine learning (ML), and data analytics, is driving a rapid rise in chip, server, and rack densities and in power consumption. As rack densities rise to 20 kilowatts (kW) and quickly approach 50 kW, the heat levels of HPC infrastructure are pushing traditional room cooling methods to their limits. Moreover, there is increasing global pressure on data centers and other enterprises to continuously reduce power consumption. To meet these demands, data center operators are investigating their liquid cooling options (see Figure 1).
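To illustrate why these densities strain room cooling, the sketch below estimates the airflow a rack would need if air alone carried its heat, using the sensible heat relation P = ρ · cp · V · ΔT. The air properties and the 12 K air temperature rise are assumptions chosen for illustration, not figures from this article.

```python
# Rough airflow needed to air-cool a rack: P = rho * cp * V_dot * dT.
AIR_DENSITY = 1.2       # kg/m^3, assumed (about 20 degC at sea level)
AIR_CP = 1005.0         # J/(kg*K), assumed
M3S_TO_CFM = 2118.88    # cubic metres per second -> cubic feet per minute


def airflow_m3s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Volumetric airflow needed to carry rack_kw at an air temperature rise of delta_t_k."""
    return rack_kw * 1000.0 / (AIR_DENSITY * AIR_CP * delta_t_k)


for kw in (20, 50):
    flow = airflow_m3s(kw)
    print(f"{kw} kW rack: {flow:.2f} m^3/s ({flow * M3S_TO_CFM:.0f} CFM)")
```

Because the required airflow scales linearly with rack power, moving from 20 kW to 50 kW multiplies the air volume per rack by 2.5 at the same temperature rise.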

Liquid cooling leverages the higher thermal transfer properties of water or dielectric fluids to dissipate heat from server components efficiently. It can be up to 3,000 times more effective than air cooling alone for HPC infrastructure, whose heat levels outpace the capabilities of traditional methods. Liquid cooling encompasses several techniques for managing heat in artificial intelligence data centers.
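The article does not say how the 3,000 figure is derived. One quantity of that order is the ratio of volumetric heat capacities, that is, how much heat a given volume of water absorbs per degree compared with the same volume of air. The sketch below computes that ratio from assumed textbook property values; it is one plausible reading of the figure, not the author's stated method.

```python
# Volumetric heat capacity = density * specific heat, in J/(m^3*K).
# Property values are assumed room-temperature textbook figures.
WATER_DENSITY, WATER_CP = 998.0, 4182.0   # kg/m^3, J/(kg*K)
AIR_DENSITY, AIR_CP = 1.2, 1005.0         # kg/m^3, J/(kg*K)


def volumetric_heat_capacity(density: float, cp: float) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise."""
    return density * cp


ratio = (volumetric_heat_capacity(WATER_DENSITY, WATER_CP)
         / volumetric_heat_capacity(AIR_DENSITY, AIR_CP))
print(f"Water absorbs roughly {ratio:.0f}x more heat per unit volume than air")
```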

HPC cooling options

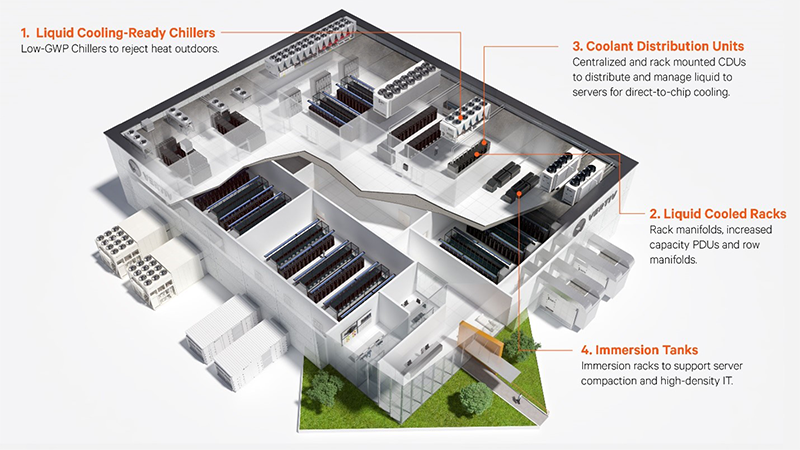

Data center operators are taking three approaches to liquid cooling: building entirely liquid-cooled data centers, retrofitting air-cooled facilities to support liquid-cooled racks in the future, and integrating liquid cooling into existing air-cooled facilities. Most operators will likely choose the last approach to increase capacity, meet immediate business needs, and achieve a quick return on investment. Liquid cooling options for HPC infrastructure include rear door heat exchangers (RDHx), direct-to-chip cooling, and immersion cooling.

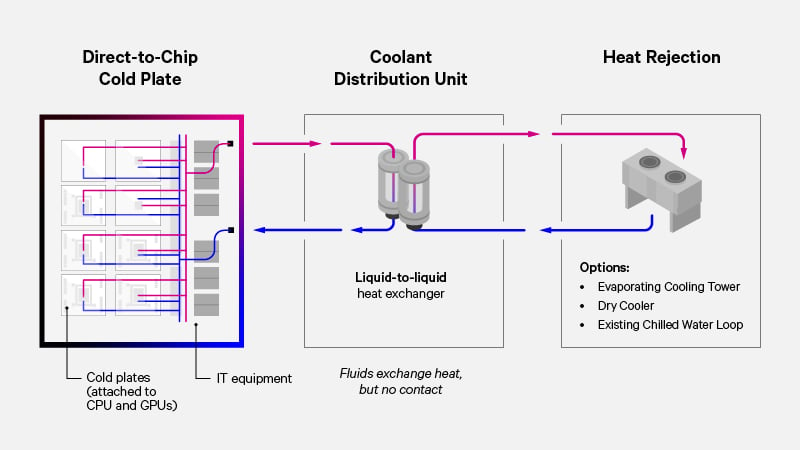

Understanding direct-to-chip cooling

Direct-to-chip cooling is an advanced thermal management technology primarily employed in data centers that run HPC hardware. This method circulates a liquid coolant, such as a water-glycol mixture or a dielectric fluid, through cold plates mounted directly on the chips to absorb and remove heat (see Figure 2). This can keep processor temperatures at optimal levels, regardless of load and external climate.

Direct-to-chip cooling improves energy efficiency, minimizes the risk of overheating, and enhances overall system performance. HPC data center operators consider this approach an efficient data center cooling method since cooling is applied directly to the heat-generating components of processors and other hardware. This technology is especially critical as data centers evolve to handle increasing computational demands and strive for higher density and efficiency.

Basic components of direct-to-chip cooling systems

Direct-to-chip cooling dissipates heat directly from the chip, allowing data centers to support higher rack densities while maximizing energy efficiency. This liquid cooling solution relies on several components that work together:

- A cooling liquid, composed of a dielectric compound or fluid specially engineered for direct-to-chip cooling

- A tube (or circulator) that moves the liquid

- A cold plate through which the liquid passes

- A thermal interface material that conducts heat from the source to the plate
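The way these components combine can be sketched as a simple series thermal resistance model: heat flows from the chip through the thermal interface material, then through the cold plate into the coolant. The function name and the resistance values below are hypothetical and chosen only for illustration; real values come from component data sheets.

```python
def chip_temperature_c(power_w: float, coolant_c: float,
                       r_tim_k_per_w: float, r_plate_k_per_w: float) -> float:
    """Steady-state chip temperature for a series thermal path.

    T_chip = T_coolant + P * (R_tim + R_plate)
    """
    return coolant_c + power_w * (r_tim_k_per_w + r_plate_k_per_w)


# Assumed example: a 700 W processor, 40 degC coolant,
# 0.02 K/W for the interface material and 0.03 K/W for the cold plate.
temperature = chip_temperature_c(700.0, 40.0, 0.02, 0.03)
print(f"Estimated chip temperature: {temperature:.1f} degC")
```

In this model, lowering either resistance or the coolant supply temperature lowers the chip temperature by a predictable amount, which is why the interface material and plate design matter as much as the fluid.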

How does direct-to-chip cooling work?

Direct-to-chip cooling draws off heat through a single-phase or two-phase process. These methods enhance the efficiency of cooling systems in artificial intelligence data centers.

Single-phase direct-to-chip cooling

Single-phase direct-to-chip cooling involves using a cold plate to transfer heat from server components like CPUs and GPUs. A cooling fluid absorbs the heat and flows through the coolant distribution unit (CDU), where a heat exchanger transfers it to another medium for outdoor rejection (see Figure 3). Non-conductive coolants reduce electrical risks, enhancing system safety and reliability.

Fluid selection balances the fluid's thermal capture properties against its viscosity. Water delivers the highest heat capture capacity but is often mixed with glycol, mainly for freeze protection; the glycol reduces heat capture capacity and increases viscosity, which raises the pumping power required. These systems can also use a dielectric fluid to mitigate the damage from a leak; however, dielectric fluid has a lower thermal transport capacity than the water/glycol mixture.
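The trade-off between fluids can be made concrete by estimating the coolant flow needed to carry a given heat load in a single-phase loop, using P = m · cp · ΔT. The property values below are rough assumptions for illustration only (the glycol blend and the dielectric fluid are generic placeholders, not specific products), and the 10 K coolant temperature rise is likewise assumed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density: float  # kg/m^3
    cp: float       # J/(kg*K)


# Rough, assumed properties; consult vendor data sheets for real designs.
COOLANTS = [
    Coolant("water", 998.0, 4182.0),
    Coolant("water/glycol blend (assumed)", 1020.0, 3900.0),
    Coolant("dielectric fluid (assumed)", 850.0, 2100.0),
]


def flow_l_per_min(heat_kw: float, coolant: Coolant, delta_t_k: float = 10.0) -> float:
    """Volumetric flow needed to absorb heat_kw at a coolant temperature rise of delta_t_k."""
    mass_flow_kg_s = heat_kw * 1000.0 / (coolant.cp * delta_t_k)
    return mass_flow_kg_s / coolant.density * 1000.0 * 60.0


for coolant in COOLANTS:
    print(f"{coolant.name}: {flow_l_per_min(50.0, coolant):.0f} L/min for a 50 kW rack")
```

Under these assumptions, a fluid with lower heat capacity needs proportionally more flow for the same load, which is the cost of choosing a dielectric fluid for leak tolerance.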

Two-phase direct-to-chip cooling

With two-phase cold plates, a low-pressure dielectric liquid flows into evaporators, where the heat generated by server components boils the fluid. The resulting vapor carries the heat away from the evaporator and transfers it outside the rack for effective heat rejection.
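Because two-phase systems remove heat mainly through boiling, the flow required depends on the fluid's latent heat of vaporization rather than on a temperature rise. The sketch below estimates mass flow from P = m · h_fg · x, where x is the fraction of liquid that vaporizes. The latent heat of 100 kJ/kg and the exit vapor fraction are illustrative assumptions, not properties of any specific fluid.

```python
def two_phase_mass_flow_kg_s(heat_w: float, latent_heat_j_per_kg: float,
                             exit_vapor_fraction: float = 1.0) -> float:
    """Mass flow needed if heat is absorbed purely by vaporizing the fluid."""
    if not 0.0 < exit_vapor_fraction <= 1.0:
        raise ValueError("exit_vapor_fraction must be in (0, 1]")
    return heat_w / (latent_heat_j_per_kg * exit_vapor_fraction)


# Assumed example: a 1 kW chip, 100 kJ/kg latent heat, 70% of the liquid vaporized.
flow = two_phase_mass_flow_kg_s(1000.0, 100e3, exit_vapor_fraction=0.7)
print(f"Required mass flow: {flow * 1000:.1f} g/s")
```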

Benefits of direct-to-chip cooling

Direct-to-chip cooling is gaining momentum because of the efficiency it delivers and the following benefits it brings to the industry:

Increased reliability and performance: Direct-to-chip cooling and other liquid cooling solutions minimize the risk of overheating and maintain uniform, lower operating temperatures, which is crucial for maintaining the reliability and longevity of HPC hardware and avoiding performance degradation.

Simpler system design and deployment: Direct-to-chip cooling can be integrated into existing server designs, minimizing disruptions to operations and streamlining the deployment process.

Ready scalability: Liquid cooling allows more processors to be housed in a smaller physical footprint and can reduce or eliminate the need for expansions or new construction. By providing more effective thermal management, direct-to-chip cooling facilitates as-needed scalability, making it easier to grow operations without compromising performance or disrupting running services.

Reduced total cost of ownership (TCO): In a report, the American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE) found that data centers utilizing both air and liquid cooling can reduce TCO compared to solely air-cooled systems. This reduction is due to higher density, increased utilization of free cooling, and improved performance per watt.

Datacenter Dynamics reports that cooling accounts for up to 40 percent of a data center's total energy bill. With this in mind, the importance of finding a best-fit solution should not be understated.

Preparing for data center liquid cooling deployment

A one-size-fits-all AI cooling solution doesn't exist, as businesses have distinct computing needs. To address their unique requirements, modern data centers, including those focused on artificial intelligence, can start deploying direct-to-chip cooling or other HPC cooling methods by performing the following steps:

Determining cooling requirements: IT and facility teams must decide how to allocate resources for new AI or high-performance computing workloads to meet current and future needs over the next one to two years, whether by converting a few racks at a time or dedicating an entire room.

Measuring the thermal footprint: Cooling teams need to identify the AI configuration, assess non-standard requirements, and evaluate current airflow while addressing gaps between new heat loads and cooling limits.

Assessing flow rates: Teams may choose to upgrade cooling solutions based on the lifecycle of IT equipment, especially if upcoming hardware refreshes require additional capacity for next-generation chips.
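The gap analysis described in these steps can be sketched as a small planning calculation: compare planned rack heat loads with what the existing air cooling can absorb. The function names, the 20 kW per-rack air limit, and the example rack loads are hypothetical and used only for illustration.

```python
AIR_LIMIT_PER_RACK_KW = 20.0  # assumed practical limit for air-cooled racks


def racks_needing_liquid(rack_loads_kw: dict[str, float],
                         air_limit_kw: float = AIR_LIMIT_PER_RACK_KW) -> list[str]:
    """Names of racks whose planned load exceeds the per-rack air cooling limit."""
    return sorted(name for name, kw in rack_loads_kw.items() if kw > air_limit_kw)


def cooling_gap_kw(rack_loads_kw: dict[str, float], room_air_capacity_kw: float) -> float:
    """Heat load that the existing room cooling cannot absorb (zero if none)."""
    return max(0.0, sum(rack_loads_kw.values()) - room_air_capacity_kw)


planned = {"A1": 12.0, "A2": 45.0, "A3": 50.0}  # hypothetical rack loads in kW
print("Racks to convert:", racks_needing_liquid(planned))
print("Room-level gap:", cooling_gap_kw(planned, room_air_capacity_kw=60.0), "kW")
```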

Exploring solutions for direct-to-chip cooling deployment in data centers

IT and facility teams can start deploying direct-to-chip cooling or other data center liquid cooling solutions after installing dedicated infrastructure that creates a fluid cooling loop. This loop enables heat transfer between the facility and secondary circuits and allows a fluid other than facility water to be used for cooling (see Figure 4).

Liquid-to-liquid CDU

CDUs provide controlled, contaminant-free coolant to direct-to-chip cold plates, as well as rear door heat exchangers and immersion cooling systems. Teams with chilled water access can use a liquid-to-liquid CDU to provide a separate cooling loop for liquid-cooled IT equipment, keeping it isolated from the facility's main chilled water system. Opting for this solution allows them to choose the fluid and flow rate for the racks, such as treated water or a water-glycol mix.

Unlike traditional comfort cooling systems that often shut down after hours and during off-seasons, the chilled water system should operate continuously for successful deployment. Prioritizing water quality through filtration options is also essential when using existing chilled water systems. Additionally, liquid-to-liquid CDUs require installing pipes and pumps to connect to the facility's water, which may affect deployment timelines.

Liquid-to-air CDU

Liquid-to-air CDUs provide an independent secondary fluid loop to the rack, dissipating heat from IT components even without chilled water access. The heated fluid returns to the CDU and flows through heat exchanger (HX) coils. Fans blow air over these coils, dispersing the heat into the data center air. The existing air-cooled infrastructure then captures this heat and expels it outside, allowing data centers to continue using traditional room cooling methods with direct-to-chip cooling.

Liquid-to-air CDUs can speed up liquid cooling deployment by utilizing existing room cooling units for heat rejection. This option requires minimal modifications to connect water pipes to building systems, occupies less space, and has lower installation and initial costs than liquid-to-liquid CDUs. However, liquid-to-air CDUs have limited cooling capacity, which can be a significant consideration for artificial intelligence data centers.

Liquid-to-refrigerant CDU

Liquid-to-refrigerant CDUs deliver liquid directly to the chip and utilize refrigerant-based condensers for direct expansion (DX) heat rejection. This approach maximizes the existing DX infrastructure while enhancing liquid cooling capacity where needed. It allows data centers to quickly deploy HPC cooling without needing onsite chilled water, enabling modular setups without completely overhauling the existing cooling infrastructure.

Liquid-to-refrigerant CDUs operating with pumped refrigerant economization (PRE) heat rejection technology provide cooling based on ambient temperatures, reducing energy use. The internal components chill the secondary fluid network to deliver high-density cooling directly to the server cold plates.
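As a rough summary of the three CDU options, the sketch below encodes one reading of the selection logic described above: chilled water access points to liquid-to-liquid, existing DX infrastructure without chilled water points to liquid-to-refrigerant, and otherwise a liquid-to-air unit reuses room cooling. The function name and this simplified decision order are assumptions; real selection also depends on capacity, space, and timelines.

```python
def suggest_cdu(has_chilled_water: bool, has_dx_infrastructure: bool) -> str:
    """Suggest a CDU type from available facility heat rejection (simplified)."""
    if has_chilled_water:
        # Separate secondary loop isolated from the facility chilled water system.
        return "liquid-to-liquid"
    if has_dx_infrastructure:
        # Rejects heat through refrigerant-based condensers.
        return "liquid-to-refrigerant"
    # Rejects heat to room air; limited capacity but fastest to deploy.
    return "liquid-to-air"


for chilled, dx in [(True, False), (False, True), (False, False)]:
    print(f"chilled water={chilled}, DX={dx}: {suggest_cdu(chilled, dx)}")
```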

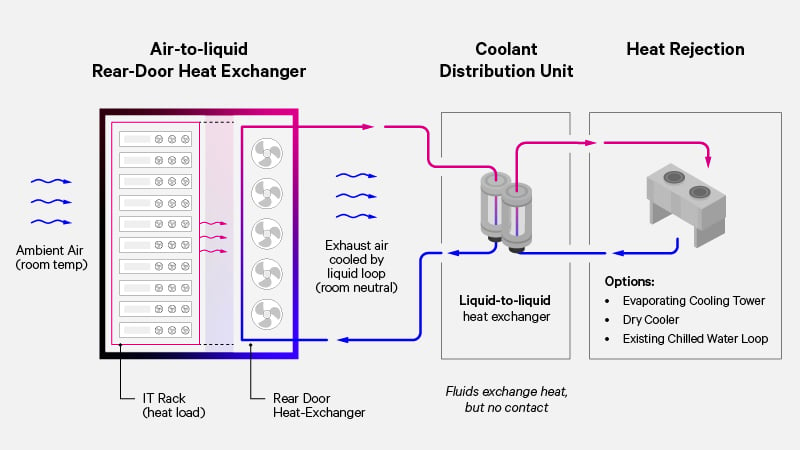

Rear door heat exchangers (RDHx)

Data centers can implement RDHxs as a first step toward high-density cooling. When adequately sized, RDHxs can be used with existing air-cooling systems without requiring significant structural changes to white space. They can also eliminate the need for containment strategies (see Figure 5). Passive units work well for loads from 5 to 25 kW, while active RDHxs provide up to 50 kW in nominal capacity and have been tested up to 70+ kW in some cases.
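The capacity ranges quoted above can be turned into a simple first-pass sizing check. The function name and the returned labels are hypothetical; the 25 kW and 50 kW thresholds come from the text, and loads below the stated 5 kW lower bound are treated here as passive by assumption.

```python
PASSIVE_MAX_KW = 25.0         # upper end of the passive range quoted in the text
ACTIVE_NOMINAL_MAX_KW = 50.0  # nominal active capacity quoted in the text


def suggest_rdhx(rack_load_kw: float) -> str:
    """First-pass RDHx type for a rack load, based on nominal capacity ranges."""
    if rack_load_kw <= PASSIVE_MAX_KW:
        return "passive"
    if rack_load_kw <= ACTIVE_NOMINAL_MAX_KW:
        return "active"
    return "exceeds nominal RDHx capacity"


for load in (10.0, 40.0, 80.0):
    print(f"{load:.0f} kW rack: {suggest_rdhx(load)}")
```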

Considerations for successful direct-to-chip cooling integration

Implementing direct-to-chip cooling technologies involves a careful assessment of various challenges that can affect their integration. Key considerations include compatibility with current infrastructure, effective fluid management, and addressing equipment capacity limitations to ensure successful deployment.

Fluid distribution

Designing safe and efficient fluid distribution systems with minimal leak risks, along with proactive leak detection, is essential for successful deployment. IT and facility teams should verify chemical composition, system temperature, pressure, and fittings to prevent leaks or failures. Implementing quick disconnect fittings and shutoff valves enhances serviceability and enables prompt leak intervention. The design of critical infrastructure components, particularly CDUs, is also vital: CDUs provide precise control over fluid volumes and pressure to mitigate the impact of any leaks.

Leak detection and intervention

A comprehensive leak detection system improves the safety and reliability of liquid cooling setups by giving timely alerts to potential issues, which helps reduce downtime and prevent damage to equipment. Indirect detection methods monitor changes in pressure and flow, while direct methods use sensors or cables to accurately locate leaks. Properly setting up these systems is crucial; reducing false alarms maintains operational efficiency while still allowing for the detection of real leaks that need urgent attention.
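An indirect detection method of the kind described above can be sketched as a check on loop pressure readings that raises an alarm only when a drop persists across several consecutive samples, which is one simple way to reduce false alarms. The function name, thresholds, and sample values are hypothetical and not taken from any particular product.

```python
from typing import Optional, Sequence


def detect_leak(pressures_kpa: Sequence[float], baseline_kpa: float,
                drop_threshold_kpa: float = 15.0,
                min_consecutive: int = 3) -> Optional[int]:
    """Return the sample index at which a leak alarm is raised, or None.

    An alarm requires the pressure to stay at least drop_threshold_kpa below
    the baseline for min_consecutive samples in a row.
    """
    run = 0
    for index, pressure in enumerate(pressures_kpa):
        if baseline_kpa - pressure >= drop_threshold_kpa:
            run += 1
            if run >= min_consecutive:
                return index
        else:
            run = 0  # a recovered reading resets the count
    return None


transient_dip = [200.0, 180.0, 200.0, 199.0, 201.0]
sustained_drop = [200.0, 184.0, 183.0, 180.0, 179.0]
print("Transient dip alarm index:", detect_leak(transient_dip, baseline_kpa=200.0))
print("Sustained drop alarm index:", detect_leak(sustained_drop, baseline_kpa=200.0))
```

Raising min_consecutive lowers the false alarm rate at the cost of slower detection, which is the tuning trade-off the paragraph above describes.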

Working closely with infrastructure partners is important to customize the system for specific applications, maximizing the effectiveness of both manual and automated intervention strategies to protect high-density configurations over 30 kW.

Fluid management

Fluid management involves tailored services to meet the coolant needs of liquid cooling systems. These services include contamination removal, air purging, coolant sampling, quality testing, adjustments, and environmentally friendly disposal. By collaborating with top coolant suppliers, Vertiv’s fluid management service ensures optimal performance and reliability of Vertiv liquid cooling systems.

Choose your path to high-density

Direct-to-chip cooling technology is transforming data centers by addressing the intense heat loads associated with HPC workloads, such as AI, machine learning, and big data analytics. With the increasing demand for processing power, more efficient cooling solutions are essential for maintaining optimal performance.

Take the next step towards high-density computing with confidence. No matter where you start or where you aim to go, Vertiv can customize solutions to transform your data center’s cooling capabilities.