The demand for resilient data center infrastructure to support advanced workloads has surged as AI becomes more prevalent. This guide explores the cost impact of AI on data center design, build, and operations, along with evolving strategies for efficiency and optimization.

Data center costs include construction expenses, hardware procurement, energy consumption, cooling systems, networking infrastructure, maintenance, and operational overheads. The cost of building and operating a data center can vary significantly depending on location, facility size, power density, and environmental considerations.

Rising demand for AI workloads

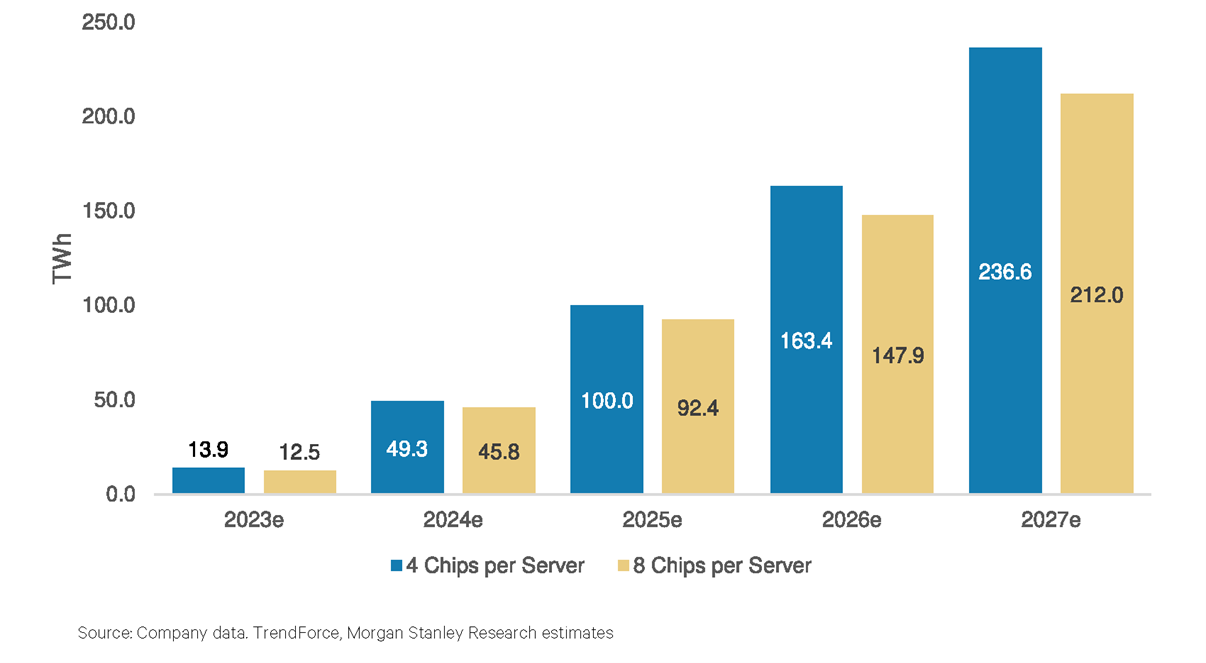

AI workloads such as machine learning, deep learning, and generative AI require substantial computational power, significantly increasing the need for data center resources. According to Morgan Stanley, global data center power usage for generative AI is expected to triple from about 15 TWh in 2023 to around 46 TWh in 2024. The surge is driven by the deployment of NVIDIA’s Blackwell chips, the full utilization of Hopper GPUs, and increased shipments from AMD and of custom silicon from major tech companies. These components are essential for supporting the growing computational demands of AI workloads, and they are only a few of the reasons for the power surge.

Figure 1. Global projected Gen AI power demand in Morgan Stanley’s base forecast.

Source: Morgan Stanley Research - Powering GenAI: How Much Power, and Who Benefits?

Data center models

A new data center for AI can follow one of several models, including hyperscale, Tier III, Tier IV, and modular facilities. Hyperscale centers offer vast resources for large-scale AI tasks, while Tier III and Tier IV centers enable high availability. Modular centers provide flexibility for changing AI needs. Each type requires careful planning, coordination, and investment to address AI infrastructure challenges effectively.

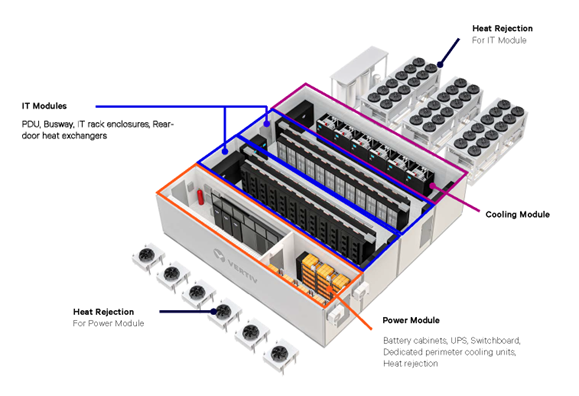

Modular data center

Modular data centers offer organizations greater flexibility, scalability, and cost efficiency than traditional brick-and-mortar facilities. By deploying prefabricated modules and containerized solutions, organizations can rapidly deploy data center infrastructure, scale resources on demand, and minimize upfront capital expenditure.

Own data center vs. Colocation

The decision to build and operate versus leveraging colocation facilities depends on various factors, including cost, control, and scalability. While owning data centers offers greater control and customization options, colocation facilities provide cost-effective solutions for organizations seeking to minimize upfront capital expenditure and operational overheads.

Enterprise data center

Enterprise data centers, designed to support mission-critical applications and workloads, are pivotal in enabling digital transformation initiatives. With redundant power and comprehensive disaster recovery capabilities, they provide a resilient environment for hosting AI workloads and sensitive data assets. They also allow greater control over security, compliance, visibility, customization, and data sovereignty while minimizing latency and regulatory risks compared to public cloud environments.

Figure 2. This Vertiv™ solution is a 1MW enterprise data center operating at 50kW per rack when all modules are bayed together. Find more Reference Designs for diverse AI deployments on vertiv.com/ai-hub.

Building and operating costs

The surge in AI demand necessitates a closer look at the costs associated with building and operating these facilities. Location, facility size, power density, and environmental considerations play a crucial role in determining these costs. The following sections explore key data center infrastructure components and their impact on operational efficiency and expenses.

Powering AI

Traditional data centers typically had power densities of 2-4 kW per rack. AI deployments now often exceed 40 kW per rack because of the high processor utilization and power-hungry nature of the GPUs and TPUs used in AI applications. Data center power demand is expected to triple by 2030, increasing from 3-4% of total US power demand today to 11-12%.
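To make the density shift concrete, the short sketch below counts the racks needed to host the same IT load at a traditional density and at an AI-era density. The 1 MW load and the function name `racks_needed` are assumptions chosen for illustration; the 4 kW and 40 kW densities come from the figures above.

```python
import math


def racks_needed(it_load_kw: float, density_kw_per_rack: float) -> int:
    """Racks required to host an IT load at a given per-rack power density."""
    return math.ceil(it_load_kw / density_kw_per_rack)


IT_LOAD_KW = 1_000  # assumed 1 MW IT load, for illustration only

# 4 kW/rack: upper end of the traditional range; 40 kW/rack: AI-era density
for density in (4, 40):
    print(f"{density} kW/rack -> {racks_needed(IT_LOAD_KW, density)} racks")
```

Under these assumptions, the same load fits in a tenth of the racks, which is why power delivery and heat removal per rack become the limiting factors.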

Considering these rising demands, the financial implications are also significant. The average cost to support 1 watt (W) of IT capacity in data centers ranges from $9 to $10.50 in North America. Additionally, the overall average year-on-year cost increase across the 2024 index is nine percent, up from six percent in 2023. This index reflects the current average cost per watt to build in 50 key data center locations globally.
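As a rough illustration of what the cost-per-watt range means at facility scale, the sketch below multiplies an IT capacity by the North American low and high figures quoted above. The 1 MW capacity and the function name `build_cost_range` are assumptions for the example; actual build costs depend on location, design, and market conditions.

```python
def build_cost_range(it_capacity_watts: float,
                     low_per_watt: float = 9.00,
                     high_per_watt: float = 10.50) -> tuple[float, float]:
    """Return the (low, high) build cost in USD for a given IT capacity."""
    return it_capacity_watts * low_per_watt, it_capacity_watts * high_per_watt


# Assumed example: a 1 MW (1,000,000 W) facility
low, high = build_cost_range(1_000_000)
print(f"Estimated build cost: ${low:,.0f} to ${high:,.0f}")
```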

To mitigate financial pressures, innovative solutions that enhance efficiency are crucial, as they directly translate to cost savings. Vertiv's high-density power solutions support up to 100kW per rack, accommodating the intense power requirements of AI workloads while optimizing space and energy use.

Additionally, Vertiv’s large UPS systems efficiently handle rapid power fluctuations, enabling stability and reducing downtime costs. The Vertiv™ DynaFlex Battery Energy Storage System (BESS) enhances reliability by leveraging the capabilities of hybrid power systems that include solar, wind, hydrogen fuel cells and other forms of alternative energy. Integrated with an Energy Management System (EMS), it offers scalability from 100kW to 100MW.

Figure 3. The Vertiv™ DynaFlex Battery Energy Storage System (BESS) supports up to 100MW and integrates seamlessly with Vertiv™ DynaFlex EMS for optimal energy management. It also reduces or eliminates the need for diesel generators by utilizing a hybrid "always-on" power approach.

Cooling AI

According to Data Center Knowledge, AI workloads can increase data center energy use by 43% annually, leading to higher heat loads. As AI tasks become more intensive, the energy required for processing rises, generating more heat. Efficient cooling systems are essential to manage these increased heat loads and prevent overheating.

For the infrastructure required to deploy the high-performance computing that generative AI demands, liquid cooling solutions, such as those offered by Vertiv, provide a compelling alternative. Liquid cooling technologies, such as immersion cooling, excel at managing the substantial heat generated by AI applications.

Data center operators must be ready to turn to liquid cooling to stay competitive in the age of generative AI. The benefits of liquid cooling, such as enabling higher efficiency, greater rack density, and improved cooling performance, make it an essential approach for organizations that want to incorporate advanced technologies and meet the cooling needs of the resulting high-density workloads.

Figure 4. This diagram illustrates the evolution of thermal management systems, comparing traditional air cooling with advanced liquid cooling solutions. Liquid cooling offers enhanced efficiency and heat dissipation, making it ideal for high-density and AI workloads.

Data center cost computation

Cooling these systems adds to the operational costs. Traditional air cooling systems are less efficient and may struggle with high heat loads, leading to increased energy consumption and higher costs. In contrast, liquid cooling systems have higher upfront costs but offer better efficiency and lower operational costs over time. They can reduce energy consumption by up to 30% compared to air cooling. For example, if a data center uses 1,000 MWh annually for cooling AI workloads, switching to liquid cooling could save approximately 300 MWh of energy. At an average electricity cost for data centers of around $0.10 per kWh, this could result in annual savings of $30,000.
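The arithmetic in this example can be reproduced with a few lines of Python. This is a minimal sketch using the figures from the paragraph above (1,000 MWh per year, an up-to-30% reduction, $0.10 per kWh); the function name `cooling_savings` is an assumption, and the 30% figure is an upper bound rather than a guaranteed result.

```python
def cooling_savings(annual_cooling_mwh: float,
                    reduction: float = 0.30,
                    price_per_kwh: float = 0.10) -> tuple[float, float]:
    """Return (energy saved in MWh, cost saved in USD) per year."""
    saved_mwh = annual_cooling_mwh * reduction
    saved_usd = saved_mwh * 1_000 * price_per_kwh  # 1 MWh = 1,000 kWh
    return saved_mwh, saved_usd


saved_mwh, saved_usd = cooling_savings(1_000)
print(f"Energy saved: {saved_mwh:,.0f} MWh, cost saved: ${saved_usd:,.0f}")
```

Changing `reduction` or `price_per_kwh` shows how sensitive the savings are to the actual efficiency gain and local electricity rates.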

Data center space

The following analysis is based on insights from the CBRE Global Data Center Trends 2024 report, which highlights the pressing challenge of limited space for data centers. The combination of limited power availability, supply chain challenges, and AI demand underscores the critical need for efficient space optimization to manage costs and maintain operational efficiency.

Global trends

In 2024, global data center real estate costs are significantly influenced by power availability and rental rates. Markets with ample power are attracting more investment, while power-constrained regions like Singapore are experiencing soaring rental rates, reaching $315-$480 per kW per month for a 250-500 kW requirement. Additionally, vacancy rates are declining globally due to strong demand, with Singapore's vacancy rate at a near-record low of 1%. Supply chain issues and construction delays further exacerbate the data center capacity shortage, leading to higher costs and pre-leasing of new facilities.

North American trends

In North America, data center inventory grew by 24.4% year-over-year in Q1 2024, despite power supply issues. This intense demand is driving up rental rates, with the average asking rate increasing by 18% year-over-year, the sharpest rise on record.

Integrated solutions

Monitoring and management

Monitoring and management tools provide real-time visibility into system operations, enabling proactive maintenance and optimization. Advanced analytics and automation enhance operational efficiency, reduce downtime, and improve resource management. For instance, monitoring platforms can track thousands of data points per minute, ensuring optimal operation and quick response to any issues. Additionally, intelligent controls and centralized management systems increase equipment availability, utilization, and efficiency.

Deployment and maintenance services

Proper installation and regular servicing of components help prevent failures and extend the lifespan of the infrastructure. Comprehensive deployment and maintenance services are available for retrofits and new builds of any scale. From rack solutions to modular data centers, Vertiv supports the entire AI journey, from test pilots to full-scale AI data centers. Costs vary by complexity and scale.

Redundant systems

Implementing redundant systems in data centers, such as UPS, backup generators, redundant cooling units, and automated failover systems, involves considerable initial costs. However, the cost benefits are substantial.

According to Forbes, the cost of downtime for large businesses has soared to an average of $9,000 per minute in 2024. This significant expense underscores the importance of investing in robust redundancy and preventive maintenance to minimize the risk of outages and allow continuous operation. The costs can be even more staggering for higher-risk industries like finance and healthcare, potentially exceeding $5 million per hour.
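To put the per-minute figure in perspective, the sketch below converts it into the cost of an outage of a given length and estimates how many minutes of avoided downtime would offset a redundancy investment. The $9,000 per minute default comes from the figure above; the $1,000,000 investment and the function names are hypothetical values chosen for illustration only.

```python
def downtime_cost(minutes: float, cost_per_minute: float = 9_000) -> float:
    """Cost in USD of an outage lasting the given number of minutes."""
    return minutes * cost_per_minute


def breakeven_minutes(investment_usd: float, cost_per_minute: float = 9_000) -> float:
    """Minutes of avoided downtime needed to offset an investment."""
    return investment_usd / cost_per_minute


print(f"One-hour outage: ${downtime_cost(60):,.0f}")

# Hypothetical redundancy investment, not a figure from this guide
investment = 1_000_000
print(f"Break-even: {breakeven_minutes(investment):.0f} minutes of avoided downtime")
```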

Labor cost

Labor costs account for 40-60% of data center expenses, including salaries, training, benefits, and overheads. As operations become more complex and automated, investing in skilled personnel is crucial for managing AI workloads and optimizing infrastructure. The shortage of qualified staff is a notable challenge, with 71% of data center operators concerned about staffing issues. This highlights the need for training and development to support advanced AI infrastructure.

Upgrade cost

Around 30% of a data center’s budget is allocated to upgrades and modernization efforts to maintain competitiveness and meet evolving business requirements. Data centers typically plan for hardware refresh cycles every 3-5 years. Key expenses include IT equipment, power and cooling infrastructure, software upgrades and energy costs. Regular maintenance and proper disposal of outdated equipment also contribute to overall upgrade costs.

Navigating the complexities of data center costs

Data center costs represent a multifaceted challenge for organizations seeking to leverage AI technologies and digital services. From construction and equipment procurement to energy consumption and operational overheads, data center operators must navigate a complex landscape of cost considerations and optimization strategies to achieve efficient, reliable, and responsible operations. By embracing innovation, implementing best practices, and leveraging cost-effective solutions, data center operators can position themselves for success in the digital age while driving value for their customers and stakeholders.

The AI heat wave is here

Managing power and heat surges in high-performance computing (HPC) is critical to preventing thermal throttling and maintaining efficiency. Traditional cooling technologies may fall short in catering to these needs, necessitating a transformation in power and cooling infrastructure designs.

Unlock new possibilities with scalable, efficient, and resilient solutions to support your business’s growth and innovation. Let us assist you in reimagining your critical infrastructure for the AI era.