As compute demands spiked over the last five years, the thermal management market also grew. According to Principal Analyst Lucas Beran, Dell’Oro Group, this market reached $3.5 billion last year and is projected to reach $6 billion by 2026. This exceptional market growth reflects the fact that, in many cases, new technologies are needed to keep up.

This past October, Ben Coughlin (Colovore), Gerard Thibault (Kao Data), and Don Mitchell (Victaulic) joined me on a panel, hosted by Lucas, at the OCP Global Summit to discuss this topic. Our conversation made clear that liquid cooling is the future of data centers hoping to keep pace with the massive spike in compute demands, especially for colocation. As another OCP panel put it: You’re either already using liquid cooling or you will be in the next 3-5 years.

What’s Driving Data Center Cooling Acceleration?

Over the last 12 years, servers and components have changed significantly. Blades and virtualization, which first surfaced over a decade ago, have significantly increased in density such that today’s compute servers routinely draw 750 watts to 1 kilowatt (kW) per rack unit. Even historically low-density flash storage now draws 400-600 watts per rack unit.
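As a rough illustration of what those per-rack-unit figures imply at the cabinet level, the sketch below simply multiplies them out. The 40 populated rack units and the function name are assumptions made for illustration, not figures from the panel.

```python
def cabinet_power_kw(watts_per_u: float, populated_units: int) -> float:
    """Total IT load of one cabinet in kW, given per-rack-unit draw."""
    return watts_per_u * populated_units / 1000.0


# Assumption: 40 rack units in a cabinet are filled with equipment.
POPULATED_UNITS = 40

# Per-rack-unit draws quoted in the text above (watts).
DRAWS_W_PER_U = {
    "flash storage (low)": 400,
    "flash storage (high)": 600,
    "compute (low)": 750,
    "compute (high)": 1000,
}

for label, watts in DRAWS_W_PER_U.items():
    print(f"{label}: {cabinet_power_kw(watts, POPULATED_UNITS):.1f} kW per cabinet")
```

Even with a partly filled cabinet, these per-unit draws add up to tens of kilowatts per rack.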

Liquid cooling has been around for as long as there have been computers, but air cooling has dominated the industry because it was the simpler option before the recent surge in compute demands. Air cooling still dominates, especially in the United Kingdom, but flexible data center infrastructures that follow OCP guidelines are on the rise. Denser compute, along with some modern chips that can’t transfer their heat to air, has pushed more operators to consider and adopt liquid cooling. New technologies, from chassis-level liquid cooling to cold-plate cooling, are in turn driving interest in air/liquid hybrid set-ups.

My colleagues on this OCP panel and I receive calls each week from customers or prospects wanting to understand how to add liquid cooling to an air-cooled data center. Vertiv’s answer: Start with the rear doors, then consider direct chip cooling.

Liquid Cooling Systems Meet High-Density Compute Demands

Liquids have significantly better heat transfer properties than air. As a result, data center liquid cooling systems are substantially more efficient than air cooling and offer a cost-effective solution for high-density racks.

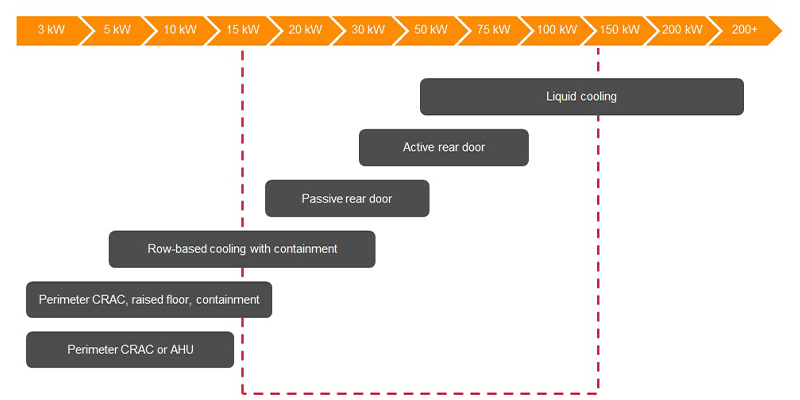

Modern rack power requirements are exceeding 20 kW, and many facilities are looking to deploy racks with requirements of 50 kW or more. The mature technology of rear door heat exchanger liquid cooling systems like Vertiv’s Liebert® DCD can manage densities above 20 kW. These liquid heat exchanger units replace the rack’s rear door. A passive design expels heated air through a liquid-filled, heat-absorbing coil mounted in place of the rear door of the rack. An active design includes fans to pull air through the coils and remove heat from even higher-density racks.
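To put the rack densities above in perspective, the sketch below estimates how much coolant must flow through a rack to carry away its heat, using the standard relation Q = ṁ · cp · ΔT. The fluid properties are approximate textbook values near room temperature, and the 10 K coolant temperature rise is an assumption chosen for illustration; none of these numbers come from the panel or from any specific product.

```python
# Approximate fluid properties near room temperature (textbook values).
WATER = {"density_kg_m3": 997.0, "cp_j_kg_k": 4180.0}
AIR = {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0}

# Assumption: the coolant warms by 10 K as it passes through the rack.
DELTA_T_K = 10.0


def volumetric_flow_m3_s(heat_load_w: float, delta_t_k: float, fluid: dict) -> float:
    """Volumetric flow needed to absorb heat_load_w with a delta_t_k rise.

    From Q = m_dot * cp * dT and m_dot = rho * V_dot:
        V_dot = Q / (rho * cp * dT)
    """
    return heat_load_w / (fluid["density_kg_m3"] * fluid["cp_j_kg_k"] * delta_t_k)


for load_kw in (20, 50):
    water_l_s = volumetric_flow_m3_s(load_kw * 1000, DELTA_T_K, WATER) * 1000
    air_m3_s = volumetric_flow_m3_s(load_kw * 1000, DELTA_T_K, AIR)
    print(f"{load_kw} kW rack: ~{water_l_s:.2f} L/s of water vs ~{air_m3_s:.2f} m^3/s of air")
```

Under these assumptions, the same heat load needs a few thousand times less volume of water than of air, which is why moving that much air through a single dense rack becomes impractical.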

Air-based cooling systems lose their effectiveness when rack densities exceed 20 kW, at which point liquid cooling becomes the viable approach.

Rear door heat exchanger liquid cooling systems are a great first step on the path toward direct chip cooling. Rear door panels work well in a hybrid liquid/air system with mixed rack densities. My fellow panelist, Gerard Thibault, shared that Kao Data uses this hybrid approach in its 2-megawatt (MW) hall. The company has 2 MW of air cooling and is adding 10 MW of liquid cooling, for a total of 12 MW of cooling.

Along with better efficiency and cost savings, liquid cooling is also a more sustainable cooling method. At this Global Summit, OCP announced sustainability as its fifth tenet, alongside efficiency, impact, openness, and scalability. Liquid data center cooling can help companies meet their sustainability goals in the coming years. Unlike air cooling, which has to work ever harder as densities rise, a rear door heat exchanger or direct chip liquid cooling solution produces better cooling results with less work, leading to less energy use and fewer carbon emissions. These technologies could also be used together to drive 100% of the heat load into the fluid!

The Look and Sound of a Liquid Cooled High-Density Colocation Center

Are liquid cooled data centers or hybrids much different from air cooled data centers? Colovore’s Ben Coughlin shared his company’s set-up: a cooling tower outside, plus pumps and pipes. Each cabinet has two pipe connections, one to deliver water and one to take it away, and that’s all. Colovore has no chiller and no floor-based coolant distribution units. Its fan-assisted “active” rear doors by Vertiv now work alongside multiple megawatts of direct liquid cooling, although this delivery is somewhat limited by the piping in place. There is very little visual difference. Temperature and sound, however, are noticeably different.

Large-compute, hybrid-cooled colocation data centers have a comfortable ambient temperature of around 70 degrees Fahrenheit (21 °C), instead of a cold aisle and a hot aisle like a traditional, air-cooled data center. The high-density compute servers and artificial intelligence (AI) servers are very loud, but the cooling components add no extra noise. Otherwise, these are perfectly “normal” data halls of standard 45-U cabinets, and customers access their servers the same way.

Airflow in the cabinet is the most significant difference. Network switches are very short and must therefore breathe front to back. Larger power distribution units require “crisp” cabling as racks aren’t lengthening at the same rate as the servers they house. It’s a tight fit inside the cabinet. Careful consideration can easily solve these concerns during design and installation.

Plan Now for the Liquid Cooled Data Center Future

As my colleague Don put it, “Don’t fear liquid cooling; plan for it.” Modern data centers are at a crossroads. Air cooling will be incapable of meeting the hefty demands of high-density compute within the next 5-10 years — barely “tomorrow” in data center time. Now is the time to embrace the coming change and plan for it before that time comes.

I was glad to join Ben, Gerard, Don, and Lucas at OCP’s “Empowering Open” Global Summit to gaze into the future of data center liquid cooling. Companies like ours — Dell’Oro Group, Colovore, Kao Data, Victaulic, and Vertiv — delight in helping our industry innovate and our customers’ projects succeed. You can watch the panel recast on YouTube and visit the Vertiv website to learn more about high-density and liquid cooling solutions.