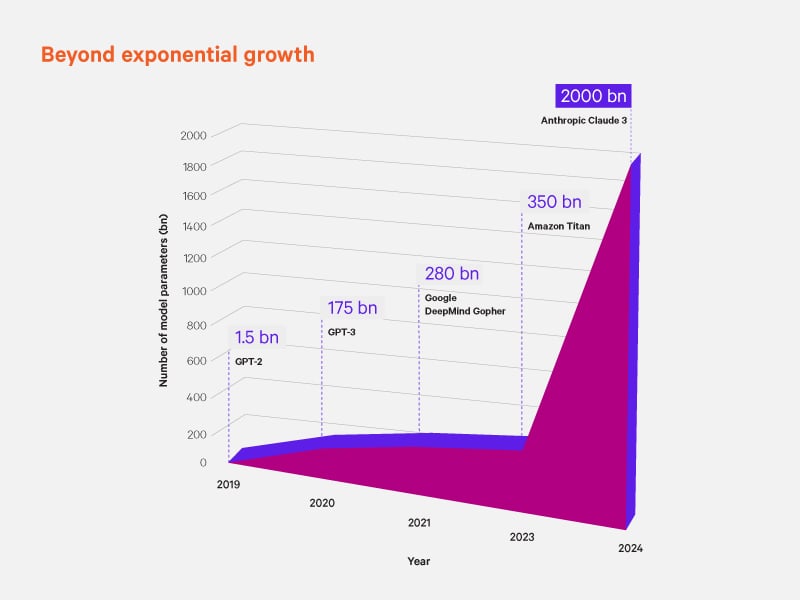

Data center operators and professionals face the monumental task of adapting their systems to keep pace with rapid advances in AI-driven architecture. AI workloads are driving these conversations and changes, and they have become the primary catalyst for growth in IT load capacity, energy consumption, and heat generation. Expanding infrastructure and understanding these burgeoning demands are critical to staying competitive.

AI's potential to mine data and extract valuable insights is akin to refining precious natural resources. The tools and infrastructure supporting this process must evolve to handle the increased load efficiently and responsibly. One of the most noteworthy trends is the dramatic rise in rack density: high rack densities of 50 kW per rack today are projected to surge to 1 MW per rack by 2029. This projection isn't an arbitrary figure; it signals changes that are already arriving at companies' and data centers' doorsteps.

The energy demand challenge

Increased rack density means more computational power, but also greater challenges in managing heat and energy consumption. Among the biggest physical and system challenges is integrating traditional air cooling with emerging liquid-cooling techniques. Liquid cooling is crucial for maintaining system performance and reliability as rack densities rise, and it can improve energy efficiency by keeping data centers running at optimal conditions without overprovisioning.

Moreover, cooling systems will have to be powered continuously. The escalating power demand of processor-intensive AI workloads requires always-on power solutions that can manage pulse loads effectively. In essence, scalable power solutions are one of the few proactive measures that let data centers handle these power surges without compromising performance or reliability. As the industry learns what these changes involve, designing scalable systems will require closer collaboration among chip manufacturers, power and cooling manufacturers and vendors, and data centers looking to keep their lead in the market.

“One of our roles today is making what power is there more available and ensuring it is used more efficiently at the data center. You need to make sure you use the power you have effectively.”

Chief Technology Officer and Executive Vice President, Vertiv

Efficient power management will be a linchpin in sustaining AI growth. Data centers must adopt more sophisticated power distribution systems capable of handling higher densities and dynamic loads. Innovations in renewable energy sources and smart grids will also be crucial to reducing the environmental footprint of these power-hungry operations.

Real-world implications

Current systems are becoming ill-equipped to manage the growing data intensity and processing power required by sophisticated AI models. Specialized hardware, such as AI accelerators and advanced graphics processing units (GPUs), is now developing so quickly that few vendors have the technical knowledge and experience to adapt to the evolving requirements of companies and data centers.

Beyond hardware, the critical IT architecture itself must be reimagined. Traditional data centers operate on principles that might no longer be viable in an AI-centric world. New architectural paradigms, such as edge computing and distributed networks, will play pivotal roles in ensuring low latency and high throughput, which are essential for real-time AI applications. This evolution will also necessitate advancements in software frameworks capable of optimizing AI workloads and managing the intricate demands of hybrid environments.

“Things are changing faster now than at any time in the last 30 years. To manage this change we need to work together as an industry to establish best practices and reference designs. It's key to being prepared for the changes that are already happening.”

Vice President, Global Power, Vertiv

Optimizing IT infrastructure for AI: Insights from our white paper

The implications of AI's advancement in data centers extend beyond technical challenges; they offer unprecedented opportunities for growth and innovation. Done right, AI adoption can help organizations achieve higher efficiency, improved performance, and a competitive edge in their respective fields.

Our white paper, “AI workloads and the future of IT infrastructure,” offers an analysis of current trends, expert predictions for future developments, and actionable strategies that can be implemented today. By downloading this white paper, data center professionals, designers, and operators gain access to a wealth of information to help make hyperscaler, colocation, and enterprise IT infrastructure future-ready.

Thriving in the AI revolution requires more than just state-of-the-art technology; it calls for holistic solutions and visionary partnerships. Businesses must choose their solutions wisely, but selecting the right partners is equally crucial. Vertiv has been working closely with leading chipmakers as a consultant on the rapidly changing critical digital infrastructure landscape, and we can help enterprises ensure their systems are ready for the technological advancements on the horizon.

Inevitably, AI is rewriting the future of IT infrastructure. Download our white paper today to arm yourself with the knowledge and tools needed to navigate the complexities of AI in IT infrastructure. To learn more about the coming AI-driven changes for critical digital infrastructure, explore the Vertiv™ AI Hub today.