The evolution of artificial intelligence (AI) and machine learning (ML), driven largely by technologies like ChatGPT and large language models (LLMs), has heightened the demand for high-performance computing (HPC). This shift requires data centers to adopt high-density cooling to meet the intense thermal demands of AI workloads. Traditional air-cooling systems alone can no longer keep up. Consequently, operators are turning to liquid cooling solutions, which can be 3,000 times more efficient than air cooling.

Air-assisted liquid cooling can improve thermal management for businesses looking to leverage AI and stay competitive. IT and facility teams can start by evaluating their cooling needs, assessing their site, and selecting the appropriate cooling solutions to create an efficient HPC cooling system.

A one-size-fits-all high-density cooling solution doesn't exist. Implementing a data center liquid cooling system requires thorough planning and assessment of an existing facility’s footprint, current cooling methods, workloads, and budget. Here's how to begin.

Determine cooling requirements

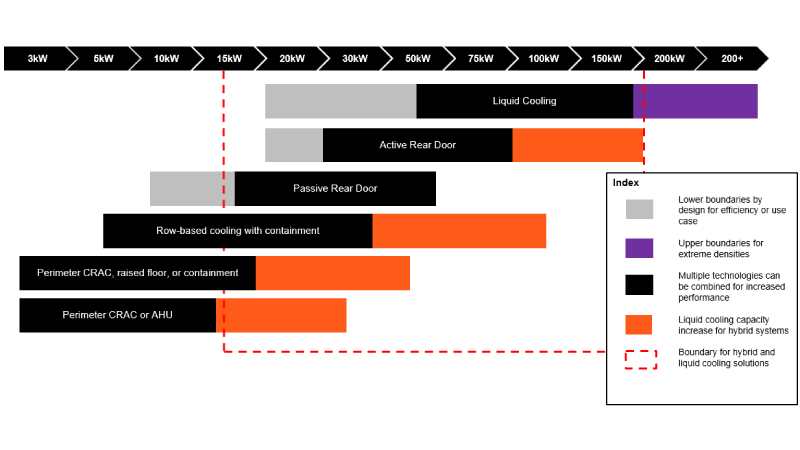

Multi-tenant data centers (MTDCs) and enterprise data centers are increasing rack densities due to the latest AI-capable graphics processing unit (GPU) chipsets. These chipsets now exceed a thermal design power (TDP) of 1,000 watts (W) and are quickly approaching 2,000 W or more. They support cloud and enterprise tasks like deep learning, natural language processing, generative AI, training, and inferencing. As a result, rack densities can reach 100 kilowatts (kW) per rack (see Figure 1).

Figure 1. Evolution of thermal management technology with increasing rack density; red boundary highlights conditions for viable data center liquid cooling.

IT and facility teams may have to decide how much space to allot to new AI or high-performance computing workloads to meet current and future demand over the next one to two years. Some are converting a few racks at a time, while others are dedicating entire rooms to these workloads and adding data center liquid cooling systems to support them.

Measure the thermal footprint

Once upfront planning is complete, cooling teams can determine the thermal footprint of AI workloads. They may need to identify which configurations will be used and whether there are any non-standard requirements. They can also measure current airflow to identify gaps between new heat loads and cooling limits.
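As a rough illustration of this gap analysis, the sketch below compares planned rack heat loads with the air-cooling capacity available to them. The function name, rack loads, and capacity figure are hypothetical placeholders, not values from any specific site.

```python
def cooling_gap_kw(rack_loads_kw, air_cooling_capacity_kw):
    """Return the heat load (kW) that exceeds the available air-cooling capacity.

    A result of 0.0 means existing air cooling covers the planned load.
    """
    total_load_kw = sum(rack_loads_kw)
    return max(0.0, total_load_kw - air_cooling_capacity_kw)


# Hypothetical example: four AI racks at 80 kW each, 150 kW of spare air cooling.
planned_racks_kw = [80.0, 80.0, 80.0, 80.0]
gap = cooling_gap_kw(planned_racks_kw, air_cooling_capacity_kw=150.0)
print(f"Heat load needing additional cooling: {gap:.1f} kW")
```

Any positive gap is the portion of the load that a liquid cooling system, or additional air cooling, would need to absorb.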

Assess flow rates

Teams can assess flow rates after determining how many kilowatts of compute each rack will hold. This step is crucial when deploying high-density cooling systems because it ensures that adequate coolant circulates through the racks, effectively removing heat. By assessing flow rates, teams can match the cooling capacity to the exact needs of the computing hardware, preventing overheating and potential damage.

Determining flow rates optimizes energy efficiency, allowing precise control over the cooling system's operation. Teams can evaluate flow rates by calculating the thermal load per rack and assessing the cooling systems' capacity to allow efficient heat removal and optimal operation.
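A first-pass flow estimate can come from the standard heat balance: heat load = mass flow x specific heat x coolant temperature rise. The sketch below applies it under stated assumptions: the fluid properties are approximate values for plain water (water-glycol mixes differ), and the 100 kW load and 10 K temperature rise are illustrative only. Real designs should use the equipment vendor's flow and temperature specifications.

```python
def required_flow_lpm(heat_load_kw, delta_t_k,
                      cp_j_per_kg_k=4186.0, density_kg_per_m3=997.0):
    """Estimate coolant flow (liters per minute) needed to remove a heat load.

    Uses Q = m_dot * cp * delta_T. Default fluid properties approximate
    plain water near room temperature (an assumption, not a site value).
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = (heat_load_kw * 1000.0) / (cp_j_per_kg_k * delta_t_k)
    volumetric_m3_s = mass_flow_kg_s / density_kg_per_m3
    return volumetric_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


# Hypothetical rack: 100 kW captured by liquid, 10 K coolant temperature rise.
flow = required_flow_lpm(heat_load_kw=100.0, delta_t_k=10.0)
print(f"Estimated flow: {flow:.0f} L/min")
```

Halving the allowed temperature rise doubles the required flow, which is why the rack load and the coolant temperature limits need to be settled together.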

Decide whether to upsize the cooling solution

Depending on how long IT equipment has been deployed, teams may choose to upsize the cooling solution. For example, if the IT equipment will be refreshed soon, building extra cooling capacity into the model to support next-generation chips may be beneficial.

Choose a high-density cooling solution

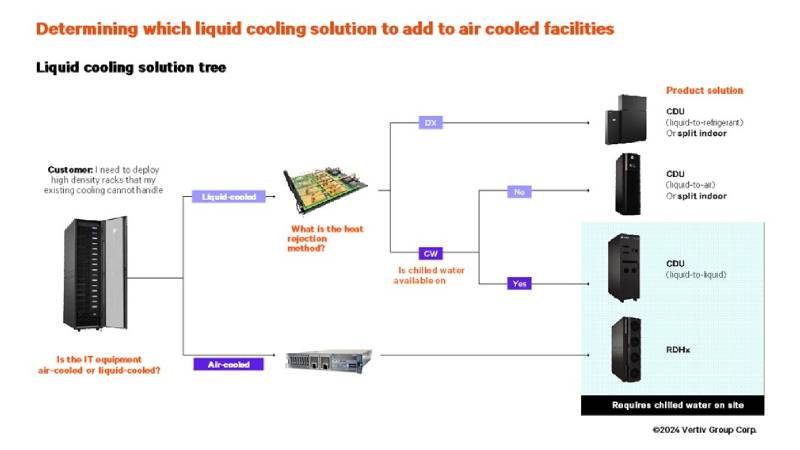

Building a high-density cooling solution involves a strategic approach to managing the intense heat generated by HPC workloads. It requires selecting and implementing technologies (see Figure 2) that can efficiently dissipate heat while minimizing energy consumption.

Figure 2. A solution tree illustrating viable liquid cooling options for air-cooled facilities based on specific conditions.

Consider chilled water onsite

If teams can access chilled water, they can deploy a liquid-to-liquid coolant distribution unit (CDU). For successful deployment in an AI data center, the chilled water system needs to operate 24x7. This differs from traditional comfort cooling systems, which often turn off after hours, on weekends, and during off-seasons.

These CDUs, available as in-rack, in-row, and perimeter models, isolate IT equipment from the facility's chilled water loop with a separate liquid loop to and from the racks called a secondary fluid network (SFN). This approach allows teams to choose the fluid for the racks, such as treated water or a water-glycol mix, as well as the flow rate. Water quality may also be an issue when deploying CDUs and leveraging existing chilled water systems. IT and facility teams may have to assess filtration capacity or the ability to implement it if needed.

Deploy CDUs without access to chilled water onsite

Some sites may not have access to chilled water systems on site but need to deploy data center liquid cooling capabilities to support business growth. Several technologies are available to facilitate this type of implementation.

Liquid-to-air CDUs provide an independent SFN to the rack and reject heat into the data center air, enabling existing air-cooling systems to capture and reject heat. The SFN is isolated, delivering chilled fluid to liquid-cooled servers in the air-cooled data center environment. Operators must also assess the capacity of existing air-cooling systems.

Use liquid-to-refrigerant CDUs to leverage existing DX condensers

Another option for deploying data center liquid cooling technologies without access to chilled water is to use existing refrigerant-based heat rejection. Liquid-to-refrigerant CDUs effectively combine direct expansion (DX) refrigerant-based heat rejection with data center liquid cooling technologies. This approach maximizes existing DX infrastructure while improving high-density cooling capacity where needed, speeding up HPC cooling deployment regardless of onsite chilled water availability. Additionally, liquid-to-refrigerant CDUs (see Figure 3) enable modular setups without completely overhauling the existing cooling infrastructure.

Figure 3. Illustration of an air-cooled data center featuring the Vertiv™ CoolChip Econophase CDU, which efficiently cools high-density rack pods without chilled water. The Vertiv™ CoolPhase CDU operates in three modes: Full Compressor Mode, Partial Economization, and Full Economization.

Operating with pumped refrigerant economization (PRE) heat rejection technology, this liquid-to-refrigerant CDU provides heat rejection based on ambient temperatures, reducing energy use. The internal components chill the SFN to deliver high-density cooling directly to the server cold plates.
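The CDU options described in this section can be summarized as a simple screening function. This is a simplified reading of the text, not a reproduction of the solution tree in Figure 2; the function and parameter names are hypothetical, and a real selection also depends on capacity, water quality, and budget.

```python
def candidate_cdu_types(has_chilled_water, chilled_water_runs_24x7,
                        has_dx_heat_rejection, has_spare_air_cooling):
    """Return the CDU types worth evaluating for a site (screening only)."""
    candidates = []
    # Liquid-to-liquid needs a chilled water loop that runs around the clock.
    if has_chilled_water and chilled_water_runs_24x7:
        candidates.append("liquid-to-liquid")
    # Liquid-to-air rejects heat into the room, so existing air cooling
    # must have capacity to capture it.
    if has_spare_air_cooling:
        candidates.append("liquid-to-air")
    # Liquid-to-refrigerant reuses existing DX heat rejection.
    if has_dx_heat_rejection:
        candidates.append("liquid-to-refrigerant")
    return candidates


# Hypothetical site: no chilled water, DX condensers present, some air headroom.
print(candidate_cdu_types(
    has_chilled_water=False,
    chilled_water_runs_24x7=False,
    has_dx_heat_rejection=True,
    has_spare_air_cooling=True,
))
```

An empty list would suggest the site needs infrastructure changes before any of these CDU types can be deployed.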

Enhance cooling with rear-door heat exchangers

AI data centers can also implement rear-door heat exchangers (RDHXs) to supplement their air-cooling capacity. RDHXs can be used with existing air-cooling systems, don't require significant structural changes to white space, and can eliminate the need for containment strategies when adequately sized. Passive units work well for loads from five to 25 kW, while active units provide up to 50 kW in nominal capacity and have been tested up to 70+ kW in some cases.
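The capacity ranges above can be turned into a quick screening check. The sketch below uses only the thresholds quoted in the text (5 to 25 kW passive, up to 50 kW nominal active); the function name and the wording of the returned labels are hypothetical, and actual sizing should follow the manufacturer's ratings.

```python
def suggest_rdhx(rack_load_kw):
    """Map a rack heat load (kW) to an RDHX category using the quoted ranges."""
    if rack_load_kw < 0:
        raise ValueError("rack_load_kw must be non-negative")
    if rack_load_kw < 5.0:
        return "below typical passive RDHX range"
    if rack_load_kw <= 25.0:
        return "passive RDHX"
    if rack_load_kw <= 50.0:
        return "active RDHX"
    # Some active units have been tested beyond nominal capacity, but that
    # needs case-by-case confirmation.
    return "above nominal active RDHX capacity"


for load_kw in (15.0, 40.0, 80.0):
    print(load_kw, "kW ->", suggest_rdhx(load_kw))
```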

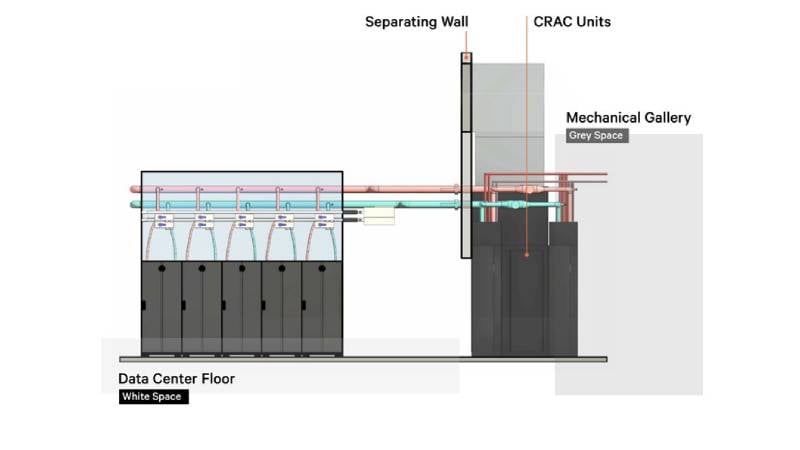

Design mechanical and compute network storage space

Teams will need to decide where to place new high-density cooling systems, such as in a hallway, in the gallery surrounding the perimeter of the white space, or in a deeper concrete trench that runs around the gallery. Regardless of the approach, the mechanical space should have adequate room to install, remove, and replace any systems without disrupting other equipment or operations (see Figure 4).

Figure 4. Illustration of a data center highlighting the grey space, where most MTDC or enterprise facility operations teams prefer to locate liquid cooling equipment, which can be placed with electrical equipment.

The IT, facility, and power teams will then work with the design consultant to plan the technical space for placing racks, air cooling distribution, fluid piping, power distribution systems, and monitoring equipment. If the AI data center has a non-raised floor, the teams will likely consider a technical design that places power and pipework conduits above the racks, supported by threaded rods hung from the ceiling joists.

Create cooling redundancy

CDUs can be orchestrated to work together in a teamwork mode. At an MTDC, as many as eight to 10 CDUs, organized as a set or pod, will support most of the load. Since each unit carries only 10-15 percent of the load, the remaining units can readily pick up the difference if one goes down due to a pump failure or other event. When appropriately sized for demand, CDUs can support up to 80 percent of the cooling demand for IT loads, while perimeter or in-row air cooling provides the remaining 20 percent.
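The arithmetic behind this redundancy can be sketched as follows. The example assumes the load is shared equally across the active CDUs in a pod, which is a simplification, and uses a hypothetical 1,000 kW IT load with the 80/20 liquid-to-air split mentioned above.

```python
def per_unit_share(num_cdus, failed_units=0):
    """Fraction of the pod's liquid load carried by each active CDU,
    assuming equal sharing (a simplifying assumption)."""
    active = num_cdus - failed_units
    if active <= 0:
        raise ValueError("at least one CDU must remain active")
    return 1.0 / active


def split_load_kw(total_it_load_kw, liquid_fraction=0.8):
    """Split an IT heat load between liquid (CDU) and air cooling."""
    liquid_kw = total_it_load_kw * liquid_fraction
    return liquid_kw, total_it_load_kw - liquid_kw


liquid_kw, air_kw = split_load_kw(1000.0)  # hypothetical 1,000 kW IT load
for n in (8, 10):
    normal = per_unit_share(n)
    degraded = per_unit_share(n, failed_units=1)
    print(f"{n} CDUs: {normal:.1%} each normally, {degraded:.1%} each with one down")
print(f"Liquid: {liquid_kw:.0f} kW, air: {air_kw:.0f} kW")
```

With eight to 10 units, losing one raises each survivor's share by only one to two percentage points, which is why a pod can tolerate a single failure if each unit is sized with that margin.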

Design and implement controls

Teams can leverage tools such as digital twins to run scenarios and analyze how systems will be deployed. They can then script alarms and alerts, fine-tuning them to expected performance and failure modes and preloading these business rules onto control logic during system installation.

Controls work with sensors, such as humidity and temperature sensors, to provide a view into operational conditions within the rack. Point leak sensors help detect leaks in the piping that distributes fluids, while rope leak sensors are placed around CDUs to detect leaks there. Filter alarms trigger if debris clogs the filter, signaling the need for a service visit.
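A minimal sketch of how such alarm rules might be expressed in software is shown below. The field names, alarm labels, and threshold values are hypothetical placeholders, and using filter pressure drop as the clogging indicator is an assumption; actual limits and signals come from the equipment vendor and the site's control system.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    supply_temp_c: float
    relative_humidity_pct: float
    leak_detected: bool
    filter_pressure_drop_kpa: float


def evaluate_alarms(reading, max_supply_temp_c=45.0,
                    max_humidity_pct=60.0, max_filter_drop_kpa=50.0):
    """Return the list of alarm labels raised by one sensor reading.

    All thresholds are illustrative placeholders, not recommended limits.
    """
    alarms = []
    if reading.leak_detected:
        alarms.append("LEAK")
    if reading.supply_temp_c > max_supply_temp_c:
        alarms.append("HIGH_TEMP")
    if reading.relative_humidity_pct > max_humidity_pct:
        alarms.append("HIGH_HUMIDITY")
    # Assumption: a clogged filter shows up as a high pressure drop.
    if reading.filter_pressure_drop_kpa > max_filter_drop_kpa:
        alarms.append("FILTER_CLOGGED")
    return alarms


sample = Reading(supply_temp_c=50.0, relative_humidity_pct=40.0,
                 leak_detected=True, filter_pressure_drop_kpa=60.0)
print(evaluate_alarms(sample))
```

Keeping thresholds as parameters makes it easier to tune them against digital-twin scenarios before loading the rules onto control logic.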

Plan maintenance

Liquid cooling systems require regular maintenance for efficient and reliable operation. Given the complex nature of liquid cooling systems, data center teams can benefit from full-service liquid cooling solutions that cover ongoing maintenance even after design and installation. By using valves to isolate individual piping sections, service teams can perform maintenance without taking the entire system down. Some imperatives involve the following:

- Cleanliness: Contaminants can reduce coolant efficiency, disrupt server operations, and cause system failures. Maintaining a clean environment, even during servicing, is essential to prevent overheating and malfunctions caused by dust and particles. Teams must uphold cleanliness standards to maintain operational efficiency and compliance with regulations.

- Commissioning: Teams evaluate liquid cooling components, fluid flow, efficiency, and functionality under actual conditions to determine if the technology meets performance criteria. This process identifies issues like leaks and fluid distribution problems before operation. Adjustments in flow rates and sensor calibration optimize performance. Service teams test fluid to compare it with initial levels.

- Fluid management: Effective fluid management maintains optimal levels, flow rates, and temperatures, which are essential for heat transfer in data center equipment. It also prevents overheating, performance issues, leaks, and contamination risks.

- Recurring services: Identifying issues early on with regular maintenance can prevent system failures and extend the lifespan of liquid cooling systems, reducing unplanned repairs or replacements. Periodic checks document system conditions and assess compliance with safety standards.

- Spare parts: Having spare parts readily available reduces repair time, minimizes downtime, and allows prompt action on issues, preventing disruptions and extended cooling reductions. Operators can benefit from partnering with a vendor that has a capillary service organization, which allows anomalies to be addressed quickly. Combined with such a service, a spare parts inventory eliminates the need for emergency repairs and expedited shipping.

Harness AI's full potential with high-density cooling

The surge in AI and ML, mainly through the development of foundation models and LLMs, demands significant upgrades in data center infrastructure so that high-density cooling can handle the heat from HPC workloads. More operators are deploying liquid cooling solutions in air-cooled data centers to harness AI's full potential.

Vertiv offers a comprehensive solution backed by support and expertise for HPC and AI data centers. Whether you need brand-new technologies or retrofit systems for higher densities, we have you covered.

Choose your path to high-density

Your journey to high-density can be a smooth ride. Vertiv offers advanced solutions engineered with industry leaders to power and cool the most challenging deployments. Unleash your AI Evolution with Vertiv today.